AD FS identity integration on Azure Stack – filling in the gaps

One of the clients I’ve been engaged with uses AD FS as the identity provider for their Azure Stack integrated system. All well and good, as setting that up using the instructions provided here is *fairly* straightforward: https://docs.microsoft.com/en-us/azure/azure-stack/azure-stack-integrate-identity. Here’s a high-level overview of the tasks that need to be performed:

On Azure Stack (by the operator via Privileged Endpoint PowerShell session):

Set up Graph integration (configure to point to on-premises AD Domain, so user / group searches can be performed, used by IAM/RBAC)

Set up AD FS integration (create the federation metadata file and use automation to configure claims provider trust with on-premises AD FS)

Set Service Admin Owner to user in the on-premises AD Domain

On customer AD FS server by an admin with correct permissions:

Configure relying party trust (either by helper script provided in Azure Stack tools, or manually)

If performing manually:

Enable Windows forms-based authentication

Add the relying party trust

Configure AD FS properties to ignore token bindings (if using IE / Edge browsers and AD FS is running on WS 2016)

Set the AD FS relying party trust token lifetime to 1440 minutes

If performing by helper script:

From Azure Stack tools directory, navigate to \DatacenterIntegration\Identity and run setupadfs.ps1
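For the manual route, the last two steps in the list above could be sketched roughly as follows. This is a minimal sketch, not the helper script's actual contents: the function name and the `-TrustName` value are hypothetical, and it assumes the relying party trust has already been added on the AD FS server.

```powershell
# Sketch only: run in an elevated session on the AD FS server.
# The function name and $TrustName are hypothetical; use your own trust name.
function Set-AzureStackAdfsManualSettings {
    param(
        [Parameter(Mandatory)] [string] $TrustName
    )

    # Ignore token bindings (relevant for IE / Edge with AD FS on WS 2016).
    Set-AdfsProperties -IgnoreTokenBinding $true

    # Set the token lifetime on the existing relying party trust (minutes).
    Set-AdfsRelyingPartyTrust -TargetName $TrustName -TokenLifetime 1440
}

# Example call (the trust name is an assumption):
# Set-AzureStackAdfsManualSettings -TrustName 'AzureStack'
```

Nothing runs until the function is called, so the block can be reviewed or dot-sourced safely first.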

The only gotcha I encountered with the instructions was that the certificate chain for AD FS was different from the one provisioned for the Azure Stack endpoints. I tried to follow the instructions for this scenario provided in the link above, but they didn’t work.

It turns out that there was a problem with me running the provided PowerShell code:

[XML]$Metadata = Invoke-WebRequest -URI https:///federationmetadata/2007-06/federationmetadata.xml -UseBasicParsing

$Metadata.outerxml|out-file c:\metadata.xml

$federationMetadataFileContent = get-content c:\metadata.cml

$creds=Get-Credential

Enter-PSSession -ComputerName -ConfigurationName PrivilegedEndpoint -Credential $creds

Register-CustomAdfs -CustomAdfsName Contoso -CustomADFSFederationMetadataFileContent $using:federationMetadataFileContent
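Two things stand out in the snippet above: the file is written as `c:\metadata.xml` but read back as `c:\metadata.cml`, and `$using:` is a scope modifier intended for `Invoke-Command` rather than for commands typed after `Enter-PSSession`. A hedged sketch of one way to address both is shown here. It is not confirmed as the fix that was actually applied. The function name, `$AdfsHost` and `$PepAddress` are hypothetical and stand in for the values elided above.

```powershell
# Sketch only. $AdfsHost and $PepAddress are hypothetical placeholders for the
# host name and privileged endpoint address that are elided in the snippet above.
function Register-AdfsFromMetadataFile {
    param(
        [Parameter(Mandatory)] [string] $AdfsHost,
        [Parameter(Mandatory)] [string] $PepAddress,
        [string] $MetadataPath   = 'C:\metadata.xml',
        [string] $CustomAdfsName = 'Contoso'
    )

    # Download the federation metadata and save it to disk.
    [xml]$metadata = Invoke-WebRequest -Uri "https://$AdfsHost/federationmetadata/2007-06/federationmetadata.xml" -UseBasicParsing
    $metadata.OuterXml | Out-File $MetadataPath

    # Read back the same file that was just written (.xml, not .cml).
    $content = Get-Content $MetadataPath

    # Use a session plus Invoke-Command so that $using: can pass local values.
    $creds   = Get-Credential
    $session = New-PSSession -ComputerName $PepAddress -ConfigurationName PrivilegedEndpoint -Credential $creds
    try {
        Invoke-Command -Session $session -ScriptBlock {
            Register-CustomAdfs -CustomAdfsName $using:CustomAdfsName -CustomADFSFederationMetadataFileContent $using:content
        }
    }
    finally {
        # Always close the privileged endpoint session.
        Remove-PSSession $session
    }
}
```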

…and here’s one that is correctly configured:

The things to check for are that the correct FQDN for the AD domain is provided and that the user / password combination is correct. Graph just needs a ‘normal’ user account with no special permissions. Make sure the password for the user is set to not expire!

You can re-run the command from the PEP without having to run Reset-DatacenterIntegationConfiguration. Only run the reset when AD FS integration is broken.

If you want to use AD Groups via Graph for RBAC control, keep in mind that they need to be Universal, otherwise they will not appear.
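The two checks mentioned above (a non-expiring password on the Graph account, and Universal scope on groups used for RBAC) could be scripted along these lines. This is a minimal sketch that assumes the ActiveDirectory RSAT module is available on a domain-joined machine; the function name and the account and group names passed to it are hypothetical.

```powershell
# Sketch only: requires the ActiveDirectory module on a domain-joined machine.
# The function name and any names passed in are hypothetical.
function Test-GraphIntegrationPrereq {
    param(
        [Parameter(Mandatory)] [string] $GraphUser,
        [string[]] $RbacGroup = @()
    )
    Import-Module ActiveDirectory

    # The Graph account password should be set to never expire.
    $user = Get-ADUser -Identity $GraphUser -Properties PasswordNeverExpires
    if (-not $user.PasswordNeverExpires) {
        Write-Warning "$GraphUser has a password that can expire."
    }

    # Groups intended for RBAC should be Universal.
    foreach ($name in $RbacGroup) {
        $group = Get-ADGroup -Identity $name
        if ($group.GroupScope -ne 'Universal') {
            Write-Warning "$name has scope $($group.GroupScope), not Universal."
        }
    }
}

# Example call (names are assumptions):
# Test-GraphIntegrationPrereq -GraphUser 'svc-graph' -RbacGroup 'AzS-Owners','AzS-Readers'
```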

Hopefully this information will help some of you out.

In my next blog post, I’ll fill in the gaps on creating AD FS SPN’s for use by automation / Azure CLI on Azure Stack.

Azure to Azure Stack site-to-site IPSec VPN tunnel failure... after 8 hours

We had a need to create a site-to-site VPN tunnel for a POC from Azure Stack to Azure. It seemed pretty straightforward. Spoiler alert, obviously I'm writing this because it wasn't. The tunnel was created okay, but each morning it would no longer allow traffic to travel across it. The tunnel would show connected in Azure and in Azure Stack but traffic just wouldn't flow; ping, SSH, RDP, DNS and AD all wouldn't work. After some tinkering we found that, to re-initiate the connection and allow traffic to flow again, we had to change the connection's sharedkey value to something random, save it, then change it back to the correct key (or recreate the connection from scratch). This only worked from the Azure Stack side of the connection. It would work for another 8 hours and then fail to pass traffic again.

My suspicion was the re-keying, as this would explain why it worked at first and would fail the next day (every day, for the last 5 days). I tried using VPN diagnostics on the Azure side, as VPN diagnostics are not currently supported on Azure Stack (we are on update 1805). After reviewing the IKE log there were some errors, but it was hard to find anything that told me what was going wrong or, more specifically, what I could do to fix it. Below is the IKE log file I collected through the VPN diagnostics from Azure.

I logged a case with Microsoft support. The first support person did their best. While Microsoft can identify the endpoints they are connecting to, from Azure, they do not have permission to dig any deeper and look into the contents of our subscriptions hosted on Azure Stack. I was asked to change the local VPN gateways from specific subnets to be the entire vnet address space. While it worked initially, again it failed after 8 hours.

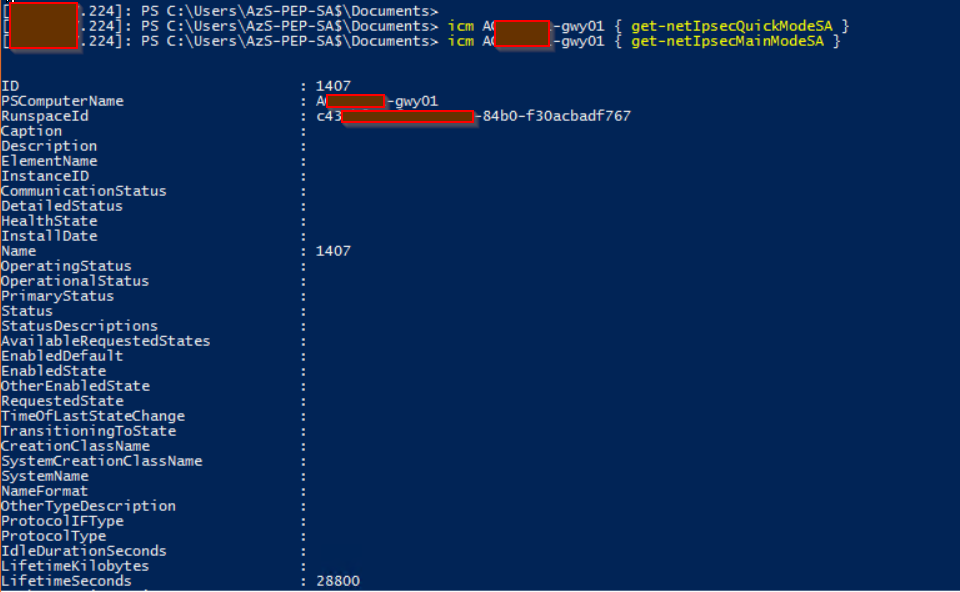

The support engineer collected some network traffic and other logs and forwarded the case to an Azure Stack support engineer. Once the call was assigned they asked me to connect to the privileged endpoint (PEP) and we proceeded with breaking the glass to Azure Stack to troubleshoot. The engineer gave me a few PowerShell commands to run to investigate what was going on.

# First find out which of the VPN gateways is active.
icm Azs-gwy01,Azs-gwy02 { get-vpns2sinterface }

# Check Quick Mode Key Exchange
icm Azs-gwy01 { get-netIpsecQuickModeSA }

# Check Main Mode Key Exchange
icm Azs-gwy01 { get-netIpsecMainModeSA }

The Microsoft engineer had a hunch of exactly what he was looking for and was on point. The commands showed that the Quick mode key exchange had failed to complete the refresh, yet the Main Mode had succeeded. This explained why the tunnel was up but no traffic could flow across it.

We rebooted the active VPN gateway so the tunnels would fail-over to the second gateway. Logging was on by default so we just had to wait for the next timeout to occur. When it did I was given the task of collecting and uploading the logs from the PEP.

These logs are a series of ETL files that need to be processed by Microsoft to make sense of them. Fortunately it turned up the following log entries.

As commented above, the root cause was that the PFS and CipherType settings were incorrect on the Azure VPN gateway. I was given a few PowerShell commands to run against the Azure subscription to reconfigure the IPSec policy for the connection on the Azure side to match the policy of the VPN gateway and connection on Azure Stack.

$RG1 = 'RESOURCE GROUP NAME'
$CONN = 'CONNECTION NAME'
$GWYCONN = Get-AzureRmVirtualNetworkGatewayConnection -Name $CONN -ResourceGroupName $RG1
$newpolicy = New-AzureRmIpsecPolicy -IkeEncryption AES256 -IkeIntegrity SHA256 -DhGroup DHGroup2 -IpsecEncryption GCMAES256 -IpsecIntegrity GCMAES256 -PfsGroup PFS2048 -SALifeTimeSeconds 27000 -SADataSizeKilobytes 33553408
Set-AzureRmVirtualNetworkGatewayConnection -VirtualNetworkGatewayConnection $GWYCONN -IpsecPolicies $newpolicy

Almost there. When I tried to run the command it failed, because the Basic SKU doesn't allow custom IPSec policies. Once I changed the SKU from Basic to Standard, the command worked and the tunnel has been up and stable.

While this is an easy fix that anyone can run against their Azure subscription without opening a support ticket, it does incur a cost difference. Hopefully in the future these policies will match out-of-the-box between Azure and Azure Stack so every consumer can use the Basic VPN SKU to connect Azure Stack to Azure over a secure tunnel.
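Since the root cause was a policy mismatch between the two ends of the tunnel, a small comparison helper can make that kind of difference visible. The sketch below is illustrative only: `Compare-IpsecPolicy` is a hypothetical helper, the first hashtable uses the values from the policy command above, and the second hashtable holds made-up values purely to show a mismatch (they are not the actual Azure defaults).

```powershell
# Sketch only: Compare-IpsecPolicy is a hypothetical helper, not an Azure cmdlet.
function Compare-IpsecPolicy {
    param(
        [Parameter(Mandatory)] [hashtable] $Reference,
        [Parameter(Mandatory)] [hashtable] $Difference
    )
    # Emit one object per setting whose value differs between the two sides.
    foreach ($key in ($Reference.Keys | Sort-Object)) {
        if ($Reference[$key] -ne $Difference[$key]) {
            [pscustomobject]@{
                Setting    = $key
                Reference  = $Reference[$key]
                Difference = $Difference[$key]
            }
        }
    }
}

# Values taken from the New-AzureRmIpsecPolicy command above.
$azureStackSide = @{
    IkeEncryption   = 'AES256'
    IkeIntegrity    = 'SHA256'
    DhGroup         = 'DHGroup2'
    IpsecEncryption = 'GCMAES256'
    IpsecIntegrity  = 'GCMAES256'
    PfsGroup        = 'PFS2048'
}

# Made-up values for the other side, for illustration only.
$otherSide = $azureStackSide.Clone()
$otherSide.PfsGroup        = 'None'
$otherSide.IpsecEncryption = 'AES256'

$mismatches = @(Compare-IpsecPolicy -Reference $azureStackSide -Difference $otherSide)
$mismatches
```

With the sample data, only the two deliberately changed settings are reported.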

WALinuxAgent issue in a Linux VM on Azure Stack

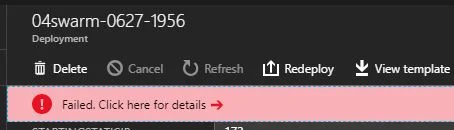

I was deploying some Linux RHEL systems yesterday on Azure Stack. Everything was working and then it wasn't. The VM had deployed fine but the custom script extension had failed. It takes 2 hours for the deployment to time out.

Deployment Failure Timeout

Failed Script Extension

Failed Extensions Details

If you cancel the deployment and attempt to delete the resource group, you will have to wait for the custom extension to time out anyway, which takes two hours. Microsoft recommends against canceling deployments and suggests letting them time out, especially on Linux. On the next deployment attempt, I got the same results. On closer inspection of the stdout for custom script extension 2.0.6 (/var/lib/waagent/custom-script/download/0/stdout), I found this error:

warning: %postun(WALinuxAgent-2.2.18-1.el7.noarch) scriptlet failed, signal 15

Stdout log

Reviewing the bash script, the first line that was running was

yum -y clean all && yum -y clean metadata && yum -y clean dbcache && yum -y makecache && yum update -yvq

This appears to be a known issue. Here is a write-up with some information that tries to explain what is happening: https://github.com/Azure/WALinuxAgent/issues/178

I believe this explains the timeout. The agent had stopped, so Azure Stack could no longer talk to the agent in the VM. If you experience this specific issue, adding an exclusion should leave the existing binaries alone. If you don't have this specific error but are still experiencing a timeout, it is probably worth checking that the WALinuxAgent is still running.

yum update -y --exclude=WALinuxAgent

Azure Stack Network Security Considerations

We’ve been playing with Azure Stack for quite a while now and it’s pretty easy to forget you’re not using the real Azure. But there are a few key differences that you should always keep in mind when deploying your applications on to Azure Stack, with one of the biggest being around Network Security.

The above diagram is taken from Microsoft Azure’s Best Practices Guide for network security. It shows the various layers of security Azure provides to its customers, both native to the platform itself and configurable by the customer. The current version of Microsoft Azure Stack implements most of these already; Public IPs, Virtual Network Isolation, NSG & UDR and (some) Network Virtual Appliances are already fully implemented within the RTM version of Azure Stack.

At the internet boundary of Azure, Microsoft implements a range of standard network security protections, including the DDoS protection shown in the diagram above but also intelligent services that block known bad actors. These protection services are provided to all Azure customers as Microsoft needs to ensure the availability and stability of their network. Azure Stack however is deployed into your own data centre, which means it sits behind your internet access point. This means you are responsible for providing protection at the internet boundary.

Deploying Azure Stack in your environment can be done in two models, connected and disconnected. In a disconnected model, the tenant workloads within the Azure Stack instance aren’t going to be on the internet. But if your Azure Stack instance is in a connected model, and your Public IP Address range consists of actual public IPv4 addresses, then this Azure Stack instance looks and feels very much like the real Azure. In this case, treating the security of applications deployed to Azure Stack just like the applications you deploy to the real Azure is vitally important.

Here are some recommendations for workloads deployed to Azure Stack:

Follow good practice regarding user accounts and passwords, including changing the default passwords, setting strong passwords, and utilising certificates where possible.

Utilise subnets or virtual networks to segregate internet-facing components from protected components.

Ensure communication to and from the backend, protected or even on-premises network cannot go directly to the internet without going through a firewall or security appliance (more on this below).

Utilise NSGs to minimise the network surfaces exposed to the internet.

Don’t have common management ports open to the internet such as RDP or SSH

If you do need to allow these ports for external management, consider deploying a jump server to reduce the attack surface or using a point-to-site VPN connection to the VNet hosting the resources.

Consider deploying a NVA such as a next gen firewall. Use UDR’s to ensure egress internet traffic goes via this appliance so you have better control and visibility of what your IaaS VM’s can reach out to, such as SaaS based services like OMS.

Patch your IaaS VM’s – make sure critical patches are applied ASAP to reduce risk of breach

For Windows workloads, deploy anti-malware protection such as Defender. It is available as an extension in Azure Stack and is free and easy to deploy, so there’s no excuse not to!

Harden your IaaS VM’s. Use the best practice guidance available from https://www.cisecurity.org/cis-benchmarks/
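As one way to act on the NSG and management-port recommendations above, the sketch below flags inbound allow rules that expose SSH or RDP to any source. It is illustrative only: `Find-OpenManagementPort` is a hypothetical helper, the sample rules are made up, and it assumes each rule exposes `Direction`, `Access`, `SourceAddressPrefix` and `DestinationPortRange` as plain strings (some module versions return lists instead, and port ranges such as `3000-4000` are not expanded here).

```powershell
# Sketch only: Find-OpenManagementPort is a hypothetical helper.
# Rule properties are assumed to be plain strings.
function Find-OpenManagementPort {
    param(
        [Parameter(Mandatory)] [object[]] $Rule,
        [string[]] $Port = @('22', '3389')
    )
    # Source values treated as "anywhere on the internet" (an assumption).
    $openSources = @('*', 'Internet', '0.0.0.0/0')

    foreach ($r in $Rule) {
        $isOpenSource = $openSources -contains $r.SourceAddressPrefix
        $isMgmtPort   = ($r.DestinationPortRange -eq '*') -or ($Port -contains $r.DestinationPortRange)
        if ($r.Direction -eq 'Inbound' -and $r.Access -eq 'Allow' -and $isOpenSource -and $isMgmtPort) {
            $r
        }
    }
}

# Made-up sample rules for illustration.
$sampleRules = @(
    [pscustomobject]@{ Name = 'rdp-any';  Direction = 'Inbound'; Access = 'Allow'; SourceAddressPrefix = '*';        DestinationPortRange = '3389' }
    [pscustomobject]@{ Name = 'ssh-jump'; Direction = 'Inbound'; Access = 'Allow'; SourceAddressPrefix = '10.0.0.4'; DestinationPortRange = '22' }
    [pscustomobject]@{ Name = 'web';      Direction = 'Inbound'; Access = 'Allow'; SourceAddressPrefix = 'Internet'; DestinationPortRange = '443' }
)

$exposed = @(Find-OpenManagementPort -Rule $sampleRules)
$exposed.Name

# Against a real NSG (names are assumptions):
# $nsg = Get-AzureRmNetworkSecurityGroup -Name 'my-nsg' -ResourceGroupName 'my-rg'
# Find-OpenManagementPort -Rule $nsg.SecurityRules
```

In the sample data only `rdp-any` is flagged: `ssh-jump` is restricted to a single source address and `web` is not a management port.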

Of course, the above guidance trusts that the customers who subscribe to your Azure Stack offers are suitably aware. If you are a CSP operating a multi-tenant Azure Stack platform, you can control the resources customers consume to a degree through Plans, Offers and Quotas, but you can’t control what they do within their IaaS VM’s. If a customer deploys a VM with a public IP, leaves root/administrator enabled and sets a weak password, you can be sure that it will get breached pretty quickly, potentially participating in a botnet and gobbling up your internet bandwidth. This is where you need to have edge security in place, external to the stamp, to mitigate these risks.

Consider the following measures:

Firewall: enforcing firewall rules or access control policies for ingress/egress communication to the external IP address space assigned to Azure Stack.

IDS or IPS (Intrusion Detection System or Intrusion Prevention System): assessing packets against known issues and attacks and creating alerts or executing real-time responses to attacks and violations.

Run regular vulnerability scans against the external IP address space assigned to Azure Stack. Identify at the earliest opportunity where a tenant may be exposed to being breached.

Auditing and Logging: maintain detailed logs for auditing and analysis.

Reverse Proxy: redirecting incoming requests to the corresponding back-end servers.

Forward Proxy: Providing NAT and auditing communication initiated from within the network to the internet. Note: The Azure Stack infrastructure only supports a transparent proxy at this stage, but workloads within Azure Stack can utilise a configurable proxy.

Updating the previous diagram and applying it to Azure Stack, you can see where the various security layers could/should be applied:

Finally, there is no ‘one size fits all’ solution to this issue when implementing network security for Azure Stack within your data centre. Factors such as Scale, Price, Certification and Regulation can all factor into a solution but the recommendations above should provide some guidance.

"....or something" he said. (PowerShell sound & speech)

Tomorrow I am on holiday and have been handing a few things over to my colleague. One task involved a PowerShell script for monitoring PowerShell jobs. He said it would be good if there was an email notification or something when the jobs had finished. email notification... boring... Or something you say... hmmm

How about one of those PowerShell songs when the jobs are finished? I thought about it and realized this was a perfect match to his specification "or something". With some internet searching and a little bit of copy and paste, there is now a SoundClips PowerShell module in the PSGallery with a few sound clips to help notify users when jobs finish.

install-module soundclips
import-module soundclips

Get-SoundClipMissionImpossible
Get-SoundClipCloseEncounter
Get-SoundClipImperialMarch
Get-SoundClipTetris
Get-SoundClipMario
Get-SoundClipHappyBirthday

While searching for sound clips, I happened across some text to speech sample code. The gears started to turn. A few minutes later... (I'm sure to the joy of my colleague) it was working. I think all my PowerShell script messages from now on will include sound clips and speech.

Get-SpeakArray @('congratulations','you have successfully deployed stuff')

Get-SpeakWhatAreYouDoing

$voice = Get-SpeakInstalledVoices | select -Last 1
Get-SpeakArray @('you call that a parameter?','You are so close, dont give up now') -voicename $voice

I am sure you can see the value add here, with text to speech, priceless. I am willing to guarantee there will be more snippets and functions added to this little gem. But for now, it's time for a holiday. I hope someone else might find this module as entertaining as I have. If you have some PowerShell sound scripts you want to be included, please don't hesitate to share below.
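To tie this back to the original job-monitoring request, here is a minimal sketch of how a notification could be hooked up once a set of jobs finishes. `Wait-JobThenNotify` is a hypothetical helper and not part of the module; it simply waits for the jobs and then calls whatever script block it is given with the total and failed counts.

```powershell
# Sketch only: Wait-JobThenNotify is a hypothetical helper, not part of the module.
function Wait-JobThenNotify {
    param(
        [Parameter(Mandatory)] [System.Management.Automation.Job[]] $Job,
        [Parameter(Mandatory)] [scriptblock] $OnComplete
    )
    # Block until every job has reached a finished state.
    $finished = @($Job | Wait-Job)

    # Count the jobs that did not complete successfully.
    $failed = @($finished | Where-Object { $_.State -ne 'Completed' })

    # Hand the total and failed counts to the caller's notification block.
    & $OnComplete $finished.Count $failed.Count
}

# Example using the module's functions from above (requires the module to be installed):
# Import-Module soundclips
# Wait-JobThenNotify -Job (Get-Job) -OnComplete {
#     param($total, $failed)
#     if ($failed -eq 0) { Get-SoundClipTetris } else { Get-SoundClipImperialMarch }
#     Get-SpeakArray @("$total jobs finished", "$failed failed")
# }
```

Passing the notification in as a script block keeps the waiting logic separate from the choice of sound, so an email (boring) could still be swapped in later.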
