Retrieving AKS on Azure Local admin credentials

I was recently asked how a customer could connect to an AKS instance hosted on Azure Local via kubectl, so that they could use locally hosted Argo CD pipelines to manage their containerized workloads (e.g. via Helm charts). The question came up because the Microsoft documentation doesn’t make it clear how to do this. For example, the How To Create a Kubernetes Cluster using Azure CLI article shows that once you’ve created a cluster, you use the az connectedk8s proxy Azure CLI command to create a proxy connection that allows kubectl commands to be run.

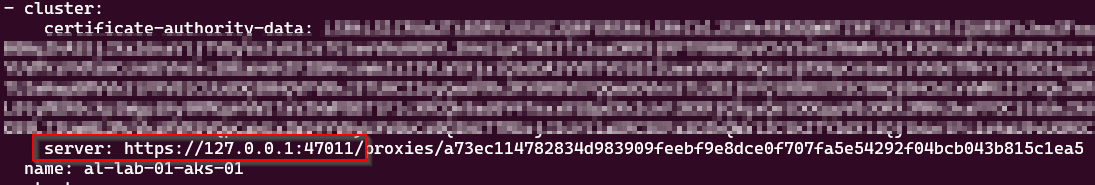

That’s fine for interactive sessions, such as running the az connectedk8s proxy command in one shell and kubectl in another. Once the proxy session is closed, however, the kube config is useless, as it looks something like this:

If you try to run a kubectl command when the proxy session is closed, it will fail. Editing the config file to replace the URL with the IP address of one of the control-plane nodes and port 6443 doesn’t work either, as the config doesn’t include the client certificate.
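If you’re not sure whether a given kube config depends on the proxy, a quick check of its server entries can help. This is only a minimal sketch: the function name kubeconfig_uses_local_proxy is made up for illustration, and it assumes the proxy-generated config points at a loopback address (127.0.0.1 or localhost), which you should confirm against your own file.

```shell
# Hypothetical helper (not part of az or kubectl).
# Succeeds (exit 0) if any "server:" entry in the given kubeconfig points at a
# loopback address, i.e. the config only works while a local proxy is running.
# Assumption: the proxy config uses 127.0.0.1 or localhost as its server host.
kubeconfig_uses_local_proxy() {
  file="$1"
  grep -Eq '^[[:space:]]*server:[[:space:]]*https?://(127\.0\.0\.1|localhost)([:/]|$)' "$file"
}

# Example:
#   if kubeconfig_uses_local_proxy ~/.kube/config; then
#     echo "This config needs an open proxy session"
#   fi
```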

To get the correct config we have 3 options:

- Use Azure CLI to retrieve the admin credentials

- SSH to one of the AKS control-plane nodes and retrieve the admin.conf file

- Run a debug container on one of the control-plane nodes and retrieve the admin.conf file

I’ll go through all 3 options, starting with the easiest.

Using Azure CLI

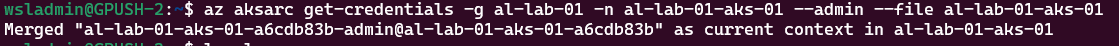

Assuming you’re already logged in to the correct Azure tenant, you can use a single command to get the admin credentials:

az aksarc get-credentials --resource-group <myResourceGroup> --name <myAKSCluster> --admin --file <myclusterconf>

A word of caution when running the command: if you don’t supply the --file parameter, it merges the config into the default ~/.kube/config file. If you want to use the credentials for automation, it’s best to keep them in a separate file; otherwise there could be a security risk.
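Since the separate file holds cluster admin credentials, it’s also worth making sure only your own user can read it. Here’s a small sketch of that idea; secure_kubeconfig is a hypothetical helper name, and I’m not assuming anything about the permissions the file is created with.

```shell
# Hypothetical helper: restrict a kubeconfig file to its owner (mode 600).
# Returns non-zero if the file does not exist.
secure_kubeconfig() {
  file="$1"
  if [ ! -f "$file" ]; then
    echo "kubeconfig not found: $file" >&2
    return 1
  fi
  chmod 600 "$file"
}

# Example, using the file written by the --file parameter:
#   secure_kubeconfig <myclusterconf> && kubectl --kubeconfig <myclusterconf> cluster-info
```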

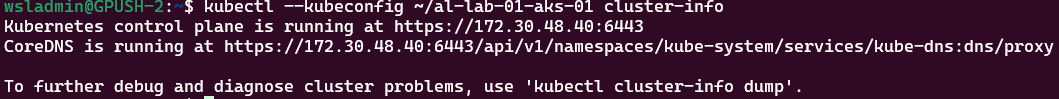

Here we prove that the config file we’ve just created uses a directly accessible URL by querying the API for the cluster info:

Using SSH to connect to a control-plane node

The second option is to SSH to one of the control-plane nodes to retrieve the details. This is a bit more involved and assumes you have the private SSH key file, either because you provided it at deployment time or because you retrieved the one created for you. You will also need to know the IP address of one of the nodes. There’s no way to get this from the Azure portal, so you will have to use kubectl.

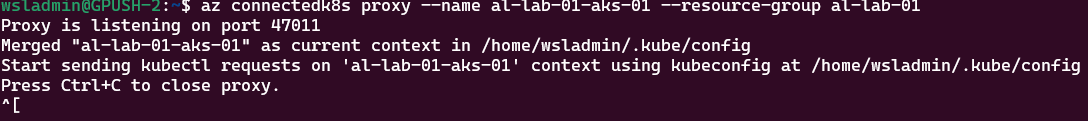

az connectedk8s proxy --name <myAKSCluster> --resource-group <myResourceGroup>

From a new shell, run:

# Prove we're connected to the AKS cluster via the proxy

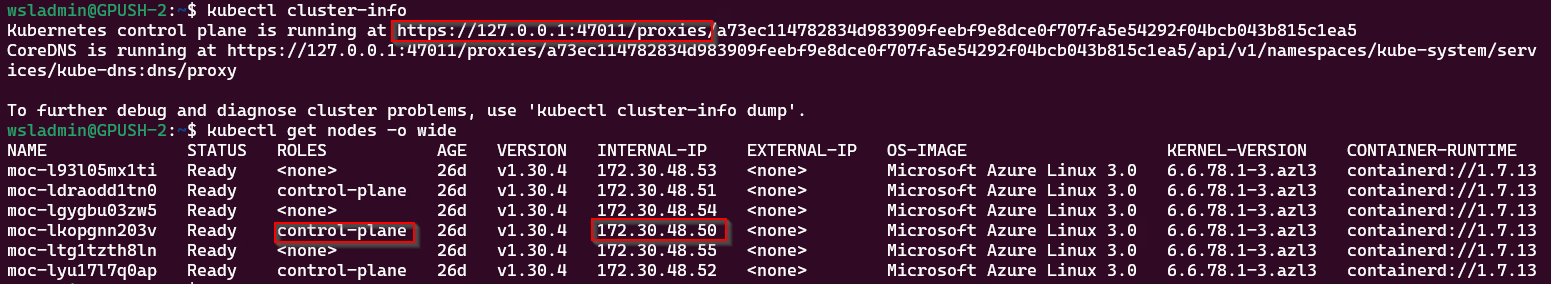

kubectl cluster-info

# Get the node information and IP addresses

kubectl get nodes -o wide

We can see the cluster is connected via the proxy session, along with a list of all the cluster nodes. We want to connect to a control-plane node, so select one that has that role and make a note of its internal IP address.
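If the cluster has a lot of nodes, you can filter the output rather than reading it by eye. The sketch below is just one way to do it: control_plane_ips is a made-up function name, and it assumes the default column order of kubectl get nodes -o wide (ROLES in the 3rd column, INTERNAL-IP in the 6th).

```shell
# Hypothetical helper: read `kubectl get nodes -o wide` output on stdin and
# print "<node-name> <internal-ip>" for each node whose ROLES column contains
# control-plane. Assumes the default column order (ROLES 3rd, INTERNAL-IP 6th).
control_plane_ips() {
  awk 'NR > 1 && $3 ~ /control-plane/ { print $1, $6 }'
}

# Example (needs the proxy session to be open):
#   kubectl get nodes -o wide | control_plane_ips
```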

Use the following command to connect to the node:

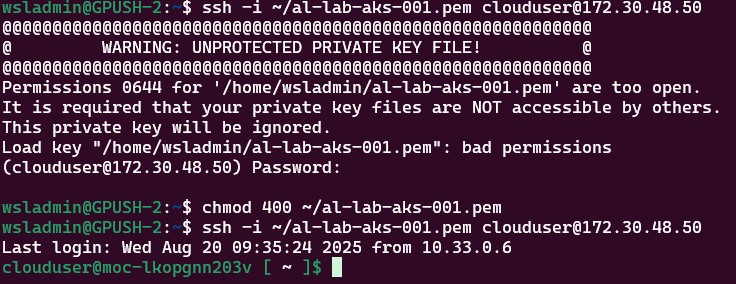

ssh -i <location of ssh private key file> clouduser@<control-plane ip>

# If you get an error about the key file permissions being too open, run:

chmod 400 <location of ssh private key file>

Now that we know the connection works, we can exit the session and use the following command to write the contents of admin.conf to a file on your local system:

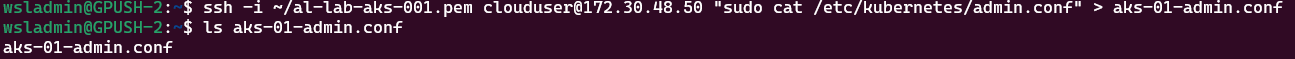

ssh -i <location of ssh private key file> clouduser@<control-plane ip> "sudo cat /etc/kubernetes/admin.conf" > ~/aks-01-admin.conf

Now we can quit our proxy session and test connectivity using the file retrieved from the node:
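One thing to watch for before testing: the shell redirect creates the local file even if the ssh or sudo command fails, so you can end up with an empty or partial file. A rough sanity check might look like the sketch below. The function name looks_like_kubeconfig is hypothetical, and the check assumes admin.conf has the usual top-level kubeconfig keys (kind: Config, clusters, users).

```shell
# Hypothetical helper: succeed only if the file is non-empty and contains the
# top-level keys a kubeconfig is expected to have. This is a rough sanity
# check, not a full YAML validation.
looks_like_kubeconfig() {
  file="$1"
  [ -s "$file" ] || return 1
  grep -q '^kind: Config' "$file" || return 1
  grep -q '^clusters:' "$file" || return 1
  grep -q '^users:' "$file"
}

# Example:
#   looks_like_kubeconfig ~/aks-01-admin.conf || echo "Retrieval failed, check the ssh command"
```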

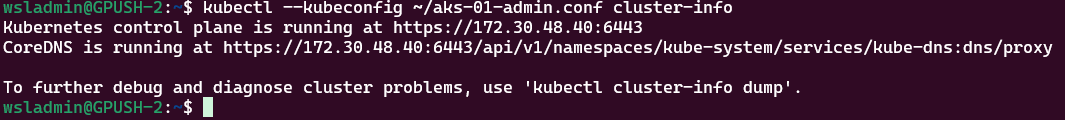

kubectl --kubeconfig ~/aks-01-admin.conf cluster-info

Running a debug container on one of the control-plane nodes

You can use this method if you don’t have the private SSH key to connect to the node directly.

We first need to make a proxy connection to the cluster so that we can run the kubectl commands:

az connectedk8s proxy --name <myAKSCluster> --resource-group <myResourceGroup>

From a new shell, run:

# Prove we're connected to the AKS cluster via the proxy

kubectl cluster-info

# Get the node information and IP addresses

kubectl get nodes -o wide

From the node output, we want to get the name of one of the control-plane nodes and use that in the following commands:

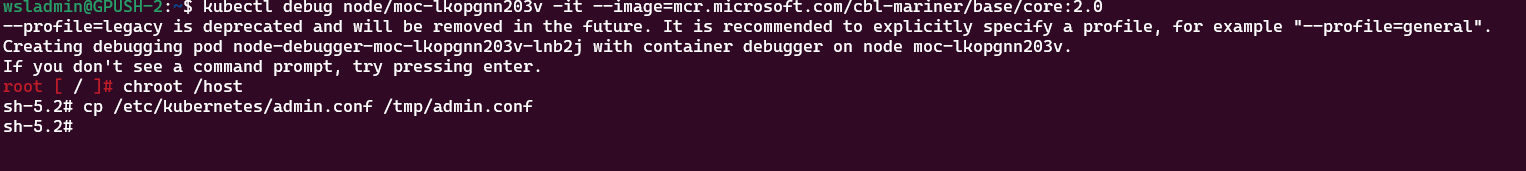

kubectl debug node/<control-plane-node-name> -it --image=mcr.microsoft.com/cbl-mariner/base/core:2.0

# use chroot to use the host file system

chroot /host

# copy the file to /tmp

cp /etc/kubernetes/admin.conf /tmp/admin.conf

Keep the debug session open and, from another shell, run the following to copy the file from the debug container to your local machine:

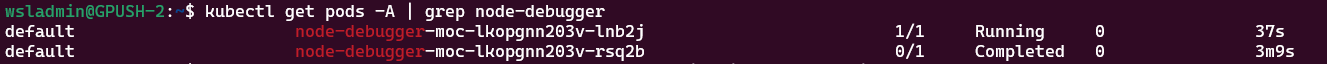

# Get the name of the running debug pod

kubectl get pods -A | grep node-debugger

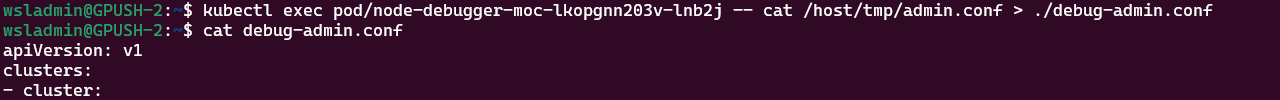

# Copy the tmp file (replace the pod name with your own)

kubectl exec pod/node-debugger-moc-lkopgnn203v-lnb2j -- cat /host/tmp/admin.conf > ./debug-admin.conf

Go back to the debug shell and exit from it.

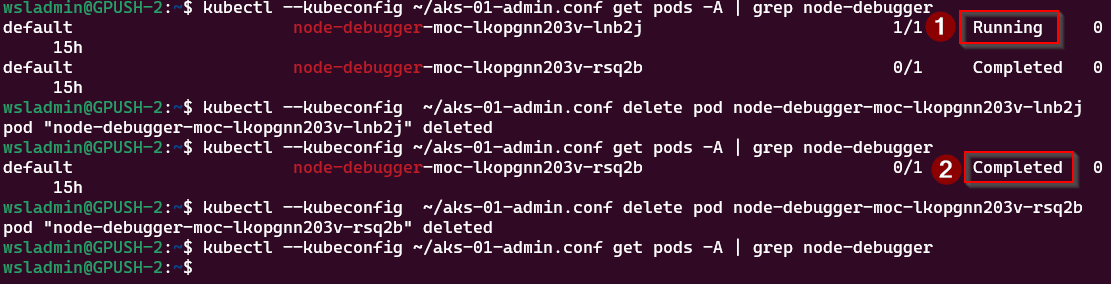

Once you’ve finished with the debug container, you can remove the pod from the cluster:

# Get the name of debug pods

kubectl --kubeconfig ~/aks-01-admin.conf get pods -A | grep node-debugger

# Delete debug pod

kubectl --kubeconfig ~/aks-01-admin.conf delete pod <node-debugger-pod-name>

You can see in the screenshot above that I had 2 debug sessions listed, one running and the other completed. The running session was there because I didn’t exit from the pod and went off to do something else (oops!). Not to worry, deleting the pod sorts that out.
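To make leftover debug pods easier to spot, including ones still running, you could filter the pod list down to the namespace, name and status. This is a sketch only: debug_pods is a made-up function name, and it assumes the default column order of kubectl get pods -A (NAMESPACE, NAME, READY, STATUS) and that debug pod names start with node-debugger-, as they do in the output above.

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# "<namespace> <pod-name> <status>" for every node debug pod.
# Assumes the default column order: NAMESPACE NAME READY STATUS ...
debug_pods() {
  awk 'NR > 1 && $2 ~ /^node-debugger-/ { print $1, $2, $4 }'
}

# Example:
#   kubectl --kubeconfig ~/aks-01-admin.conf get pods -A | debug_pods
```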

Conclusion

Clearly, the easiest way to retrieve the admin credentials is method one: using the Azure CLI aksarc extension. I wanted to show the other methods, though, as a reference on how to connect to the control-plane nodes and retrieve a file.
