Deploying ASDK 2206 to an Azure VM

Azure Stack Hub version 2206 was released a couple of months ago, but anyone trying to deploy ASDK 2206 to Azure will have found that the latest version available is 2108. Until the official method is updated, here’s how you can do it.

I’m using the awesome scripts by Yagmur Sahin as the basis for the solution: https://github.com/yagmurs/AzureStack-VM-PoC

Open the Azure portal and then create a PowerShell Cloud Shell.

I recommend you reset the user settings, as there can be issues with versions of the Azure PowerShell modules.

Run the following command in the new PowerShell session:

git clone https://github.com/dmc-tech/AzureStack-VM-PoC.git

Run the following, changing the values to meet your requirements. VirtualMachineSize can be one of the following sizes:

"Standard_E32s_v3",

"Standard_E48s_v3"

cd ./AzureStack-VM-PoC/ARMv2

$ResourceGroupName = 'asdk01-uks'

$Region = 'uk south'

$VirtualMachineSize = 'Standard_E48s_v3'

$DataDiskCount = 11

./Deploy-AzureStackonAzureVM.ps1 -ResourceGroupName $ResourceGroupName -Region $Region -VirtualMachineSize $VirtualMachineSize -DataDiskCount $DataDiskCount

The configuration example above has enough resources to run an OpenShift cluster.

Running the script will initially:

Create a resource group

Create a storage account

Copy the ASDK 2206 VHD image to the storage account

Create the VM using the VHD image

Create a Public IP for the VM

Note: As part of the provisioning process, the admin user account you specify gets changed to ‘Administrator’. I would strongly recommend removing the Public IP associated with the VM and deploying Azure Bastion to protect your ASDK instance.

Once the ASDK VM has been provisioned, connect to it (Bastion or RDP). The username you specified previously is ignored, so use ‘Administrator’ as the user and enter the password you defined.

Once connected, open a PowerShell window (as Administrator), and run the following as an example (I’m using ADFS as I’m simulating a disconnected environment)

C:\CloudDeployment\Setup\InstallAzureStackPOC.ps1 -TimeServer '129.6.15.28' -DNSForwarder '8.8.8.8' -UseADFS

You’ll then need to enter the AdminPassword when prompted, and then the script will do its magic (as long as the password is correct!) and take a number of hours to install.

The above recording shows the first few minutes of the script (sped-up! :) ).

After a few hours, the VM will reboot. If you want to check progress, you should use the following username to connect:

azurestackadmin@azurestack.local

Use the password you initially defined

Here’s some of the output you’ll see from PowerShell if you do connect as azurestackadmin (there’s still a few hours left to go!)

After 7 hours 25 minutes, the install completed. You can determine this from the following log entry:

To prove that version 2206 has been installed, open the admin portal and check the properties for the region/instance.

As I used ADFS for this example, I had to log in as cloudadmin@azurestack.local. If using AAD, use the account you defined when initially running the setup script.

Hope that helps if you want to deploy version 2206, and that it also serves as a simplified deployment tutorial.

Footnote: I tried using v5 series VMs to deploy ASDK on, but it failed due to a network issue. I assume it is due to a different NIC/driver being used than in the v3 series.

Azure Arc Connected K8s Billing usage workbook error - 'union' operator: Failed to resolve table expression named 'ContainerLogV2'

Using ‘Container Insights’ for Kubernetes can get expensive. I recently saw a customer generating 19TB of logs a month from Container Insights on an AKS cluster.

You may want to check which logs you are capturing, as suggested in the Microsoft guide Monitoring cost for Container insights - Azure Monitor | Microsoft Docs, which lists checking billing usage as one of the first steps.

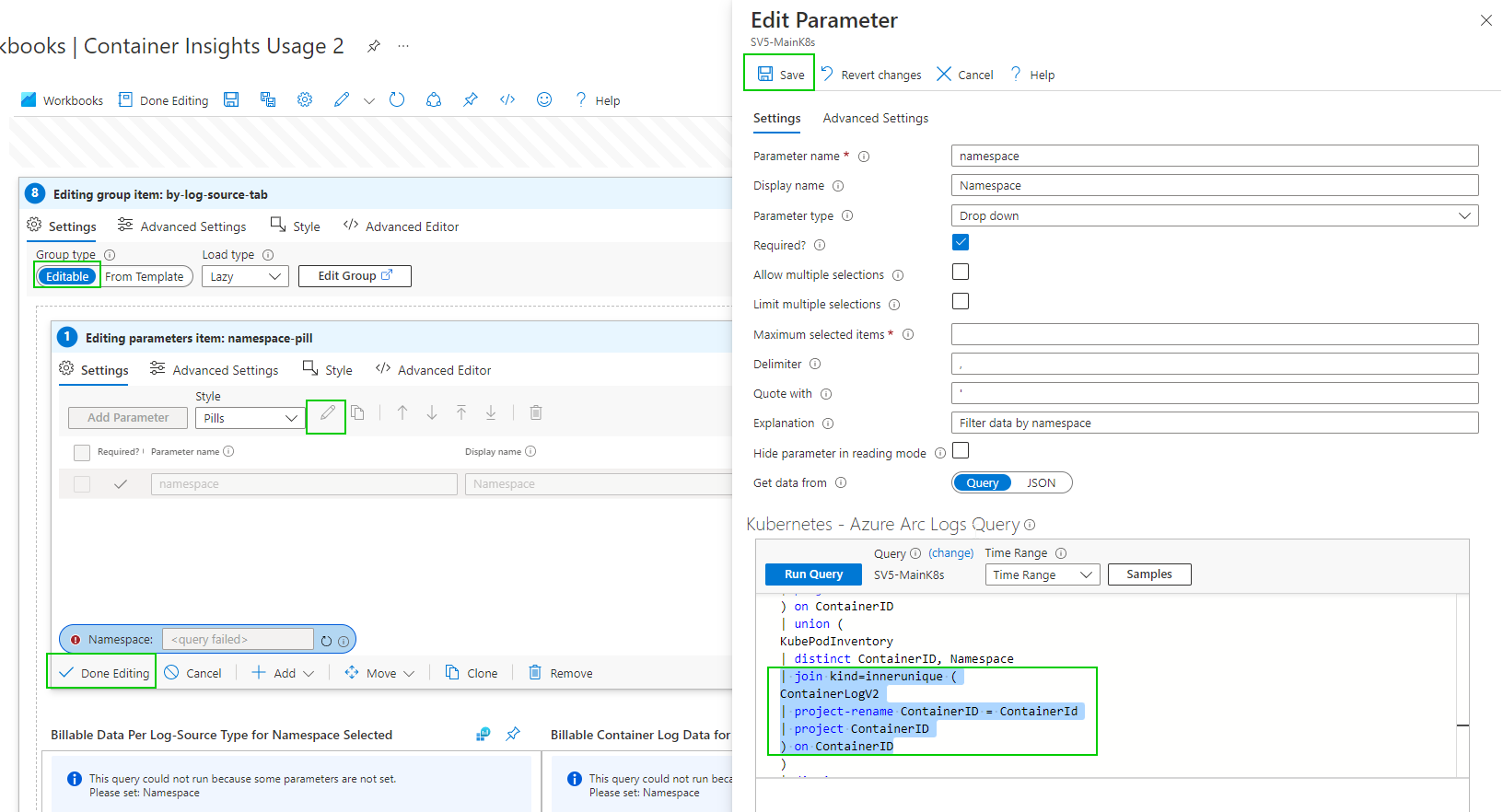

As of writing this, if you enable Container Insights and try to view Data Usage, you may get an error: ‘union' operator: Failed to resolve table expression named 'ContainerLogV2’. Whether you hit it depends on when you deploy and how you deployed Insights metrics. ContainerLogV2 is in preview, so if you haven’t deployed it or just aren’t ready to, here is how to fix the report manually. I have also included the full report at the end.

You can follow this guide to deploy ContainerLogV2: Configure the ContainerLogV2 schema (preview) for Container Insights - Azure Monitor | Microsoft Docs. If you want to gain some quick insight into your usage, you can edit the workbook instead. We just need to get through the maze of edits to get to the underlying query.

Select ‘Azure Arc’ services

Select ‘Kubernetes clusters’ under Infrastructure

Select your K8s cluster

Select ‘Workbooks’ under Monitoring

Select the ‘Container Insights Usage’ workbook

You can either edit this one or ‘Save As’ and create a new name. I will create a copy.

Open your chosen workbook

You can now edit the group item.

by Namespace

Editing the Namespace selection Dropdown parameter query

Hopefully, you get the gist of the process. There are a few more queries to edit.


This will now work for Data Usage. This is likely a temporary solution until ContainerLogV2 comes out of preview. Configure the ContainerLogV2 schema (preview) for Container Insights - Azure Monitor | Microsoft Docs
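If you would rather make the same edit outside the portal, the workbook JSON can be exported from the Advanced Editor and filtered in a shell. The following is only a rough sketch: it assumes the failing queries reference ContainerLogV2 as an entry in a comma-separated table list, and the helper name strip_containerlogv2 and the file names are hypothetical. Any query that uses the table in another way would still need a manual edit, so review the result before saving it back.

```shell
#!/usr/bin/env bash
# Hypothetical helper: drop ContainerLogV2 from comma-separated table lists.
# Reads workbook JSON (or a bare query) on stdin and writes the result to stdout.
strip_containerlogv2() {
  sed -E \
    -e 's/,[[:space:]]*ContainerLogV2//g' \
    -e 's/ContainerLogV2[[:space:]]*,[[:space:]]*//g'
}

# Usage (file names are placeholders):
#   strip_containerlogv2 < workbook.json > workbook-fixed.json
```

The first expression handles the table appearing in the middle or at the end of a list, the second handles it appearing first.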

Gallery Template code

{

"version": "Notebook/1.0",

"items": [

{

"type": 9,

"content": {

"version": "KqlParameterItem/1.0",

"crossComponentResources": [

"{resource}"

],

"parameters": [

{

"id": "670aac26-0ffe-4f29-81c2-d48911bc64b6",

"version": "KqlParameterItem/1.0",

"name": "timeRange",

"label": "Time Range",

"type": 4,

"description": "Select time-range for data selection",

"isRequired": true,

"value": {

"durationMs": 21600000

},

"typeSettings": {

"selectableValues": [

{

"durationMs": 300000

},

{

"durationMs": 900000

},

{

"durationMs": 3600000

},

{

"durationMs": 14400000

},

{

"durationMs": 43200000

},

{

"durationMs": 86400000

},

{

"durationMs": 172800000

},

{

"durationMs": 259200000

},

{

"durationMs": 604800000

},

{

"durationMs": 1209600000

},

{

"durationMs": 2419200000

},

{

"durationMs": 2592000000

},

{

"durationMs": 5184000000

},

{

"durationMs": 7776000000

}

],

"allowCustom": true

}

},

{

"id": "bfc96857-81df-4f0d-b958-81f96d28ddeb",

"version": "KqlParameterItem/1.0",

"name": "resource",

"type": 5,

"isRequired": true,

"isHiddenWhenLocked": true,

"typeSettings": {

"additionalResourceOptions": [

"value::1"

],

"showDefault": false

},

"timeContext": {

"durationMs": 21600000

},

"timeContextFromParameter": "timeRange",

"defaultValue": "value::1"

},

{

"id": "8d48ec94-fde6-487c-98bf-f1295f5d8b81",

"version": "KqlParameterItem/1.0",

"name": "resourceType",

"type": 7,

"description": "Resource type of resource",

"isRequired": true,

"query": "{\"version\":\"1.0.0\",\"content\":\"\\\"{resource:resourcetype}\\\"\",\"transformers\":null}",

"isHiddenWhenLocked": true,

"typeSettings": {

"additionalResourceOptions": [

"value::1"

],

"showDefault": false

},

"timeContext": {

"durationMs": 21600000

},

"timeContextFromParameter": "timeRange",

"defaultValue": "value::1",

"queryType": 8

},

{

"id": "1b826776-ab99-45ab-86db-cced05e8b36d",

"version": "KqlParameterItem/1.0",

"name": "clusterId",

"type": 1,

"description": "Used to identify the cluster name when the cluster type is AKS Engine",

"isHiddenWhenLocked": true,

"timeContext": {

"durationMs": 0

},

"timeContextFromParameter": "timeRange"

},

{

"id": "67227c35-eab8-4518-9212-1c3c3d564b20",

"version": "KqlParameterItem/1.0",

"name": "masterNodeExists",

"type": 1,

"query": "let MissingTable = view () { print isMissing=1 };\r\nlet masterNodeExists = toscalar(\r\nunion isfuzzy=true MissingTable, (\r\nAzureDiagnostics \r\n| getschema \r\n| summarize c=count() \r\n| project isMissing=iff(c > 0, 0, 1)\r\n) \r\n| top 1 by isMissing asc\r\n);\r\nprint(iif(masterNodeExists == 0, 'yes', 'no'))\r\n",

"crossComponentResources": [

"{resource}"

],

"isHiddenWhenLocked": true,

"timeContext": {

"durationMs": 0

},

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}"

}

],

"style": "pills",

"queryType": 0,

"resourceType": "{resourceType}"

},

"name": "pills"

},

{

"type": 1,

"content": {

"json": "Please note that the Container Insights Usage workbook for AKS Engine clusters shows your billable data usage for your cluster's entire workspace ({resource:name}), and not just the cluster itself ({clusterId}). ",

"style": "info"

},

"conditionalVisibility": {

"parameterName": "resourceType",

"comparison": "isEqualTo",

"value": "microsoft.operationalinsights/workspaces"

},

"name": "aks-engine-billable-data-shown-applies-to-entire-workspace-not-just-the-cluster-info-message",

"styleSettings": {

"showBorder": true

}

},

{

"type": 11,

"content": {

"version": "LinkItem/1.0",

"style": "tabs",

"links": [

{

"id": "3b7d39f2-38c5-4586-a155-71e28e333020",

"cellValue": "selectedTab",

"linkTarget": "parameter",

"linkLabel": "Overview",

"subTarget": "overview",

"style": "link"

},

{

"id": "7163b764-7ab2-48b3-b417-41af3eea7ef0",

"cellValue": "selectedTab",

"linkTarget": "parameter",

"linkLabel": "By Table",

"subTarget": "table",

"style": "link"

},

{

"id": "960bbeea-357f-4c07-bdd9-6f3f123d56fd",

"cellValue": "selectedTab",

"linkTarget": "parameter",

"linkLabel": "By Namespace",

"subTarget": "namespace",

"style": "link"

},

{

"id": "7fbcc5bd-62d6-4aeb-b55e-7b1fab65716a",

"cellValue": "selectedTab",

"linkTarget": "parameter",

"linkLabel": "By Log Source",

"subTarget": "logSource",

"style": "link"

},

{

"id": "3434a63b-f3d5-4c11-9620-1e731983041c",

"cellValue": "selectedTab",

"linkTarget": "parameter",

"linkLabel": "By Diagnostic Master Node ",

"subTarget": "diagnosticMasterNode",

"style": "link"

}

]

},

"name": "tabs"

},

{

"type": 1,

"content": {

"json": "Due to querying limitation on Basic Logs, if you are using Basic Logs, this workbook provides partial data on your container log usage.",

"style": "info"

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "overview"

},

"name": "basic-logs-info-text"

},

{

"type": 12,

"content": {

"version": "NotebookGroup/1.0",

"groupType": "editable",

"title": "Container Insights Billing Usage",

"items": [

{

"type": 1,

"content": {

"json": "<br/>\r\nThis workbook provides you a summary and source for billable data collected by [Container Insights solution.](https://docs.microsoft.com/en-us/azure/azure-monitor/insights/container-insights-overview)\r\n\r\nThe best way to understand how Container Insights ingests data in Log Analytics workspace is from this [article.](https://medium.com/microsoftazure/azure-monitor-for-containers-optimizing-data-collection-settings-for-cost-ce6f848aca32)\r\n\r\nIn this workbook you can:\r\n\r\n* View billable data ingested by **solution**.\r\n* View billable data ingested by **Container logs (application logs)**\r\n* View billable container logs data ingested segregated by **Kubernetes namespace**\r\n* View billable container logs data ingested segregated by **Cluster name**\r\n* View billable container log data ingested by **logsource entry**\r\n* View billable diagnostic data ingested by **diagnostic master node logs**\r\n\r\nYou can fine tune and control logging by turning off logging on the above mentioned vectors. [Learn how to fine-tune logging](https://docs.microsoft.com/en-us/azure/azure-monitor/insights/container-insights-agent-config)\r\n\r\nYou can control your master node's logs by updating diagnostic settings. [Learn how to update diagnostic settings](https://docs.microsoft.com/en-us/azure/aks/view-master-logs)"

},

"name": "workbooks-explanation-text"

},

{

"type": 1,

"content": {

"json": "`Master node logs` are not enabled. [Learn how to enable](https://docs.microsoft.com/en-us/azure/aks/view-master-logs)"

},

"conditionalVisibility": {

"parameterName": "masterNodeExists",

"comparison": "isEqualTo",

"value": "no"

},

"name": "master-node-logs-are-not-enabled-msg"

}

]

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "overview"

},

"name": "workbook-explanation"

},

{

"type": 12,

"content": {

"version": "NotebookGroup/1.0",

"groupType": "editable",

"items": [

{

"type": 3,

"content": {

"version": "KqlItem/1.0",

"query": "union withsource = SourceTable AzureDiagnostics, AzureActivity, AzureMetrics, ContainerLog, Perf, KubePodInventory, ContainerInventory, InsightsMetrics, KubeEvents, KubeServices, KubeNodeInventory, ContainerNodeInventory, KubeMonAgentEvents, ContainerServiceLog, Heartbeat, KubeHealth, ContainerImageInventory\r\n| where _IsBillable == true\r\n| project _BilledSize, TimeGenerated, SourceTable\r\n| summarize BillableDataBytes = sum(_BilledSize) by bin(TimeGenerated, {timeRange:grain}), SourceTable\r\n| render piechart\r\n\r\n\r\n",

"size": 3,

"showAnnotations": true,

"showAnalytics": true,

"title": "Billable Data from Container Insights",

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}",

"crossComponentResources": [

"{resource}"

],

"chartSettings": {

"xAxis": "TimeGenerated",

"createOtherGroup": 100,

"seriesLabelSettings": [

{

"seriesName": "LogManagement",

"label": "LogManagementSolution(GB)"

},

{

"seriesName": "ContainerInsights",

"label": "ContainerInsightsSolution(GB)"

}

],

"ySettings": {

"numberFormatSettings": {

"unit": 2,

"options": {

"style": "decimal"

}

}

}

}

},

"customWidth": "50",

"showPin": true,

"name": "billable-data-from-ci"

}

]

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "table"

},

"name": "by-datatable-tab"

},

{

"type": 12,

"content": {

"version": "NotebookGroup/1.0",

"groupType": "editable",

"items": [

{

"type": 3,

"content": {

"version": "KqlItem/1.0",

"query": "KubePodInventory\r\n| distinct ContainerID, Namespace\r\n| join kind=innerunique (\r\nContainerLog\r\n| where _IsBillable == true\r\n| summarize BillableDataBytes = sum(_BilledSize) by ContainerID\r\n) on ContainerID\r\n| union (\r\nKubePodInventory\r\n| distinct ContainerID, Namespace\r\n)\r\n| summarize Total=sum(BillableDataBytes) by Namespace\r\n| render piechart\r\n",

"size": 3,

"showAnalytics": true,

"title": "Billable Container Log Data Per Namespace",

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}",

"crossComponentResources": [

"{resource}"

],

"tileSettings": {

"showBorder": false,

"titleContent": {

"columnMatch": "Namespace",

"formatter": 1

},

"leftContent": {

"columnMatch": "Total",

"formatter": 12,

"formatOptions": {

"palette": "auto"

},

"numberFormat": {

"unit": 17,

"options": {

"maximumSignificantDigits": 3,

"maximumFractionDigits": 2

}

}

}

},

"graphSettings": {

"type": 0,

"topContent": {

"columnMatch": "Namespace",

"formatter": 1

},

"centerContent": {

"columnMatch": "Total",

"formatter": 1,

"numberFormat": {

"unit": 17,

"options": {

"maximumSignificantDigits": 3,

"maximumFractionDigits": 2

}

}

}

},

"chartSettings": {

"createOtherGroup": 100,

"ySettings": {

"numberFormatSettings": {

"unit": 2,

"options": {

"style": "decimal"

}

}

}

}

},

"name": "billable-data-per-namespace"

}

]

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "namespace"

},

"name": "by-namespace-tab"

},

{

"type": 12,

"content": {

"version": "NotebookGroup/1.0",

"groupType": "editable",

"items": [

{

"type": 9,

"content": {

"version": "KqlParameterItem/1.0",

"crossComponentResources": [

"{resource}"

],

"parameters": [

{

"id": "d5701caa-0486-4e6f-adad-6fa5b8496a7d",

"version": "KqlParameterItem/1.0",

"name": "namespace",

"label": "Namespace",

"type": 2,

"description": "Filter data by namespace",

"isRequired": true,

"query": "KubePodInventory\r\n| distinct ContainerID, Namespace\r\n| join kind=innerunique (\r\nContainerLog\r\n| project ContainerID\r\n) on ContainerID\r\n| union (\r\nKubePodInventory\r\n| distinct ContainerID, Namespace\r\n)\r\n| distinct Namespace\r\n| project value = Namespace, label = Namespace, selected = false\r\n| sort by label asc",

"crossComponentResources": [

"{resource}"

],

"typeSettings": {

"additionalResourceOptions": [

"value::1"

],

"showDefault": false

},

"timeContext": {

"durationMs": 0

},

"timeContextFromParameter": "timeRange",

"defaultValue": "value::1",

"queryType": 0,

"resourceType": "{resourceType}"

}

],

"style": "pills",

"queryType": 0,

"resourceType": "{resourceType}"

},

"name": "namespace-pill"

},

{

"type": 3,

"content": {

"version": "KqlItem/1.0",

"query": "KubePodInventory\r\n| where Namespace == '{namespace}'\r\n| distinct ContainerID, Namespace\r\n| join hint.strategy=shuffle (\r\nContainerLog\r\n| where _IsBillable == true\r\n| summarize BillableDataBytes = sum(_BilledSize) by LogEntrySource, ContainerID\r\n) on ContainerID\r\n| union (\r\nKubePodInventory\r\n| where Namespace == '{namespace}'\r\n| distinct ContainerID, Namespace\r\n)\r\n| extend sourceNamespace = strcat(LogEntrySource, \"/\", Namespace)\r\n| summarize Total=sum(BillableDataBytes) by sourceNamespace\r\n| render piechart",

"size": 0,

"showAnnotations": true,

"showAnalytics": true,

"title": "Billable Data Per Log-Source Type for Namespace Selected",

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}",

"crossComponentResources": [

"{resource}"

],

"chartSettings": {

"createOtherGroup": 100,

"ySettings": {

"numberFormatSettings": {

"unit": 2,

"options": {

"style": "decimal"

}

}

}

}

},

"customWidth": "35",

"showPin": true,

"name": "billable-data-per-log-source",

"styleSettings": {

"showBorder": true

}

},

{

"type": 3,

"content": {

"version": "KqlItem/1.0",

"query": "KubePodInventory\r\n| where Namespace == '{namespace}'\r\n| distinct ContainerID, Namespace\r\n| project ContainerID\r\n| join hint.strategy=shuffle ( \r\nContainerLog\r\n| project _BilledSize, ContainerID, TimeGenerated, LogEntrySource\r\n) on ContainerID\r\n| union (\r\nKubePodInventory\r\n| where Namespace == '{namespace}'\r\n| distinct ContainerID, Namespace\r\n| project ContainerID\r\n)\r\n| summarize ContainerLogData = sum(_BilledSize) by bin(TimeGenerated, {timeRange:grain}), LogEntrySource\r\n| render linechart\r\n\r\n",

"size": 0,

"showAnnotations": true,

"showAnalytics": true,

"title": "Billable Container Log Data for Namespace Selected",

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}",

"crossComponentResources": [

"{resource}"

],

"chartSettings": {

"xSettings": {},

"ySettings": {

"numberFormatSettings": {

"unit": 2,

"options": {

"style": "decimal"

}

}

}

}

},

"customWidth": "65",

"conditionalVisibility": {

"parameterName": "namespace:label",

"comparison": "isNotEqualTo",

"value": "<unset>"

},

"showPin": true,

"name": "billable-container-log-data-for-namespace-selected",

"styleSettings": {

"showBorder": true

}

}

]

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "logSource"

},

"name": "by-log-source-tab"

},

{

"type": 12,

"content": {

"version": "NotebookGroup/1.0",

"groupType": "editable",

"items": [

{

"type": 1,

"content": {

"json": "`Master node logs` are not enabled. [Learn how to enable](https://docs.microsoft.com/en-us/azure/aks/view-master-logs)"

},

"conditionalVisibility": {

"parameterName": "masterNodeExists",

"comparison": "isEqualTo",

"value": "no"

},

"name": "master-node-logs-are-not-enabled-msg-under-masternode-tab"

},

{

"type": 3,

"content": {

"version": "KqlItem/1.0",

"query": "AzureDiagnostics\r\n| summarize Total=sum(_BilledSize) by bin(TimeGenerated, {timeRange:grain}), Category\r\n| render barchart\r\n\r\n\r\n",

"size": 0,

"showAnnotations": true,

"showAnalytics": true,

"title": "Billable Diagnostic Master Node Log Data",

"timeContextFromParameter": "timeRange",

"queryType": 0,

"resourceType": "{resourceType}",

"crossComponentResources": [

"{resource}"

],

"chartSettings": {

"ySettings": {

"unit": 2,

"min": null,

"max": null

}

}

},

"customWidth": "75",

"conditionalVisibility": {

"parameterName": "masterNodeExists",

"comparison": "isEqualTo",

"value": "yes"

},

"showPin": true,

"name": "billable-data-from-diagnostic-master-node-logs"

}

]

},

"conditionalVisibility": {

"parameterName": "selectedTab",

"comparison": "isEqualTo",

"value": "diagnosticMasterNode"

},

"name": "by-diagnostic-master-node-tab"

}

],

"fallbackResourceIds": [

"/subscriptions/357e7522-d1ad-433c-9545-7d8b8d72d61a/resourceGroups/ArcResources/providers/Microsoft.Kubernetes/connectedClusters/sv5-k8sdemo"

],

"fromTemplateId": "community-Workbooks/AKS/Billing Usage Divided",

"$schema": "https://github.com/Microsoft/Application-Insights-Workbooks/blob/master/schema/workbook.json"

}

Deploying 100+ node MicroK8s Kubernetes Cluster connected to Azure with Arc

Using MAAS to deploy 121 physical nodes with Ubuntu, installing MicroK8s from cloud-init, and connecting the cluster to Azure using Azure Arc

How long would it take to deploy 100+ physical servers with Ubuntu, create a MicroK8s cluster, and connect it to Azure using Azure Arc?

This post walks through the process of creating a MicroK8s cluster and connecting it to Azure using Azure Arc, MAAS, Ubuntu, and MicroK8s.

First we need a primary server to start the K8s cluster, so let’s deploy one.

Let’s create a tag ‘AzureConnectedMicroK8s’ so we can keep track of the servers we are deploying.

While we are waiting for the deployment, let’s head over to the MicroK8s - Zero-ops Kubernetes for developers, edge and IoT website and look up the commands for creating a MicroK8s cluster using snaps. We want to use a specific version, 1.24.

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

sudo microk8s status --wait-ready

We need to extend the life of the join token so we can reuse it for multiple servers. MicroK8s - Command reference shows we do this with the --token-ttl switch.

sudo microk8s add-node --token-ttl 9999999

I want to use cloud-init commands with MAAS to deploy Ubuntu and join it automatically to the MicroK8s cluster, so let’s build that script. We will try two versions: one that pulls updates and one that doesn’t. Let’s tag the 2 new nodes we are adding first.

#!/bin/bash

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

# updated content to include a random delay for adding lots of nodes

randomNumber=$((10 + $RANDOM % 240))

sleep "$randomNumber"

sudo microk8s join 172.30.9.31:25000/8a4fb96dd8c711aaa895ba0da2e0dd91/fb637a6f6f64

We can check the deployment and look for the additional nodes using the kubectl command.

# let’s create a Kube config file

mkdir -p ~/.kube

sudo microk8s kubectl config view --raw > $HOME/.kube/config

sudo usermod -aG microk8s $USER

# fix permissions for Helm warning

chmod go-r ~/.kube/config

sudo snap install kubectl --classic

kubectl version --client

kubectl cluster-info

# Add auto completion and Alias for kubectl

sudo apt-get install -y bash-completion

echo 'source <(kubectl completion bash)' >>~/.bashrc

echo 'alias k=kubectl' >>~/.bashrc

echo 'complete -o default -F __start_kubectl k' >>~/.bashrc

# need to restart the session to use the alias

kubectl get nodes

# using k Alias

k get nodes

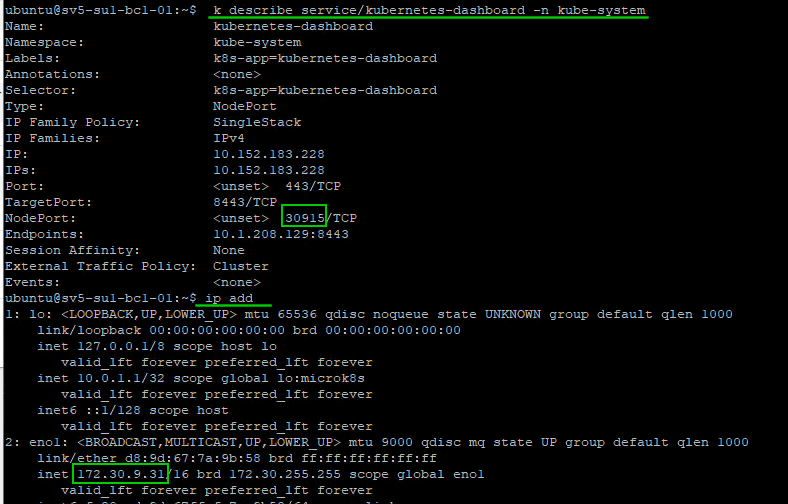

k get nodes -o wide

Let’s get the K8s dashboard running.

sudo microk8s enable dashboard ingress storage

# add MAAS DNS servers

sudo microk8s enable dns:172.30.0.30,172.30.0.32

# DNS troubleshooting

# https://kubernetes.io/docs/tasks/administer-cluster/dns-debugging-resolution/

k -n kube-system edit service kubernetes-dashboard

# change type from ClusterIP to NodePort (approx. line 35)

# save this token for later use

k create token -n kube-system default --duration=8544h

k describe service/kubernetes-dashboard -n kube-system

Next, let’s deploy some workers from MAAS and tag the servers accordingly.

#!/bin/bash

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

sudo microk8s join 172.30.9.31:25000/8a4fb96dd8c711aaa895ba0da2e0dd91/fb637a6f6f64 --worker

While waiting for these workers, I am going to use choco to install Octant (“choco install octant”). I create a copy of the Linux kube config as a local ‘config’ file on my Windows desktop in the user\.kube directory. After running octant.exe, we can access the dashboard via http://127.0.0.1:7777, which shows us the new workers we have just added.

Let’s work to connect the cluster to Azure using the following guide: Quickstart: Connect an existing Kubernetes cluster to Azure Arc - Azure Arc | Microsoft Docs. First, let’s make sure the resource providers are registered.

# Using PowerShell

Get-AzResourceProvider -ProviderNamespace Microsoft.Kubernetes

Get-AzResourceProvider -ProviderNamespace Microsoft.KubernetesConfiguration

Get-AzResourceProvider -ProviderNamespace Microsoft.ExtendedLocation

Next, I add role assignments to my user account for ‘Kubernetes Cluster - Azure Arc Onboarding’ and ‘Azure Arc Kubernetes Cluster Admin’. Depending on your onboarding account and process this may vary.
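The provider check can also be done from the Azure CLI, which this walkthrough installs on the primary node a little later. This is a minimal sketch, assuming az is installed and logged in; the helper name ensure_provider is hypothetical, and registration itself can take several minutes to complete after the command returns.

```shell
#!/usr/bin/env bash
# Hypothetical helper: register a resource provider only if it is not
# already registered. Requires a logged-in Azure CLI (az login).
ensure_provider() {
  local ns="$1" state
  state="$(az provider show --namespace "$ns" --query registrationState --output tsv)"
  if [ "$state" = "Registered" ]; then
    echo "$ns: already registered"
  else
    echo "$ns: $state, registering"
    az provider register --namespace "$ns"
  fi
}

# Usage:
#   for ns in Microsoft.Kubernetes Microsoft.KubernetesConfiguration Microsoft.ExtendedLocation; do
#     ensure_provider "$ns"
#   done
```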

# run on master node

sudo microk8s enable helm3

sudo snap install helm --classic

# install Azure CLI

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

az login

# use the browser device login code

az account show

# use 'az account set --subscription subname' to ensure you have correct subscription

az extension add --name connectedk8s

az extension add --name k8s-configuration

# using an existing resource group

az connectedk8s connect --name sv5-microk8s --resource-group ArcResources

# troubleshooting

# https://docs.microsoft.com/en-us/azure/azure-arc/kubernetes/troubleshooting#enable-custom-locations-using-service-principal

While this is deploying, you can open another connection and run

“k -n azure-arc get pods,deployments”

If Azure Arc is experiencing any errors trying to create the deployments, you can query the pod logs using “k -n azure-arc logs <pod-name>”
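With more than a handful of pods, it can help to pick out only the ones that are not healthy before pulling logs. A small sketch, assuming the default column layout of kubectl get pods (NAME, READY, STATUS, RESTARTS, AGE); the helper name unhealthy_pods is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of pods whose STATUS column is neither
# Running nor Completed. Expects `kubectl get pods --no-headers` output on stdin.
unhealthy_pods() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage:
#   kubectl -n azure-arc get pods --no-headers | unhealthy_pods |
#     while read -r pod; do
#       kubectl -n azure-arc logs "$pod" --all-containers --tail=20
#     done
```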

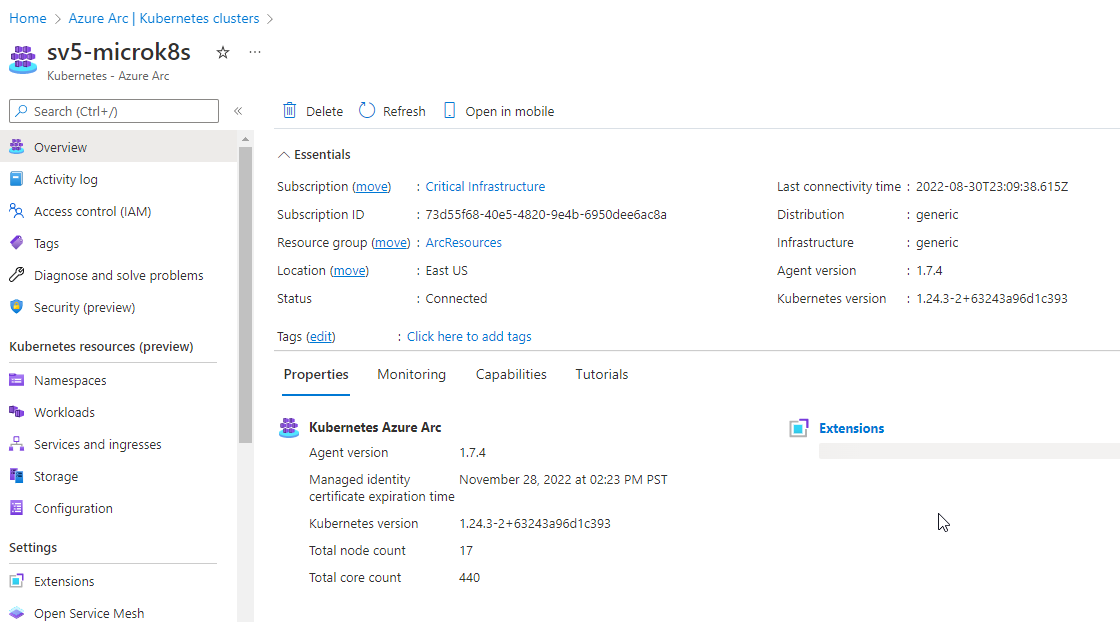

Checking the Azure resource group, we can see the resource being created, with a total node count of 7 and a total core count of 160.

Using the token we saved, you can now sign in to the on-premises cluster from Azure.

You can now explore the namespaces, workloads, services, etc. from the Azure Portal.

Let’s scale this out and show how easily you can create a large-scale K8s cluster using your old hardware managed by MAAS and connected to Azure using Azure Arc. Using the same worker join command as before in the cloud-init script, let’s deploy 10 new physical servers from MAAS and add them directly to the K8s cluster as workers.
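When deploying batches like this, the cloud-init script can be generated rather than edited by hand. The sketch below combines the worker join with the random delay used earlier; the helper name render_worker_userdata is hypothetical, and it assumes the join target printed by microk8s add-node is still within its --token-ttl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a cloud-init user-data script that installs
# MicroK8s and joins an existing cluster as a worker after a random delay.
# $1 = join target as printed by `microk8s add-node` (ip:port/token/hash)
# $2 = snap channel (defaults to 1.24, the version used in this post)
render_worker_userdata() {
  local join_target="$1" channel="${2:-1.24}"
  cat <<EOF
#!/bin/bash
sudo apt-get update
sudo apt-get upgrade -y
sudo snap install microk8s --classic --channel=${channel}
# stagger joins so many nodes do not hit the primary at once (10-249 s)
sleep \$((10 + RANDOM % 240))
sudo microk8s join ${join_target} --worker
EOF
}

# Usage (paste the output into the MAAS 'Cloud-init user-data' box):
#   render_worker_userdata 172.30.9.31:25000/<token>/<hash>
```

The delay expression is escaped in the heredoc so that it is evaluated on each node at boot rather than once when the script is rendered.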

And we can see the node count has increased to 17.

I do expect some failures here, as we are still onboarding some hardware and cleaning up issues, but let’s grab all the ready servers and see how well Ubuntu, MAAS, MicroK8s, and Azure Arc can handle this scale-out.

Obviously, adding that many servers can stress the system. There were some errors around pulling the images for the networking, but after leaving them for a few hours they self-corrected, completed the deployment, and joined the cluster successfully.

We do have some internal MTU sizing issues in the switches, so not all hosts were added. Looking at the DaemonSets under Workloads, we can see the calico and nginx pods at 121 ready out of 121 deployed.

Looking at the node count we have 121 nodes added and 5452 CPU cores ready to work. That’s a lot of power you can now leverage to run container-based deployments.
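The same totals can be cross-checked from the command line. A minimal sketch, assuming each node reports its CPU capacity as a whole number of cores; the helper name summarize_capacity is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: total the node count and CPU cores.
# Expects two whitespace-separated columns on stdin: node name, CPU count.
summarize_capacity() {
  awk 'NF >= 2 { nodes++; cores += $2 }
       END { printf "%d nodes, %d cores\n", nodes, cores }'
}

# Usage:
#   kubectl get nodes --no-headers \
#     -o custom-columns=NAME:.metadata.name,CPU:.status.capacity.cpu | summarize_capacity
```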

Overall, it was fairly easy to reuse a wide range of new and old hardware to create a large Kubernetes cluster and connect it to the Azure Portal via Azure Arc. This cluster could be used for a wide range of services, including custom container hosting or Azure-delivered services like Azure SQL or Machine Learning.

We will continue to explore Azure Arc-connected services.

Explore

Quickstart: Connect an existing Kubernetes cluster to Azure Arc - Azure Arc | Microsoft Docs

MAAS (Metal-as-a-Service) Full HA Installation — Crying Cloud

Configure Kubernetes cluster - Azure Machine Learning | Microsoft Docs

Supporting Content

Update Management Center and Azure Arc for Linux Server Patch Management

Let’s say you want to redeploy some of your on-premises servers for a Kubernetes cluster or LXD cluster. In our MAAS portal, we can select the appropriate ‘Ready’ systems we want to deploy. In this demonstration we have a range of different hardware selected: an HP blade 460c, a Dell blade M630, a Cisco C220, and 2 Quanta boxes.

Select desired OS and Release

Check ‘Cloud-init user-data’

Paste in the Azure Arc Connected script. You need to include the bash header ‘#!/bin/bash’.

Start deployment
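Because a mistake in the user-data only shows up after the server has been deployed, a quick pre-flight check on the script file can save a redeploy. This is a sketch only: the helper name check_userdata is hypothetical, and it assumes unreplaced values still contain runs of ‘xxxx’ like the placeholders in the reference script.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for a cloud-init user-data script.
# Returns non-zero if the bash header is missing or placeholder values remain.
check_userdata() {
  local file="$1"
  if [ "$(head -n 1 "$file")" != '#!/bin/bash' ]; then
    echo "missing '#!/bin/bash' header" >&2
    return 1
  fi
  if grep -q 'xxxx' "$file"; then
    echo "placeholder values (xxxx) still present" >&2
    return 1
  fi
}

# Usage (file name is a placeholder):
#   check_userdata arc-onboarding.sh && echo "ready to paste into MAAS"
```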

Linux Bash script for reference. This was generated by the Azure Portal using an onboarding agent. You can find more details about this here Azure Arc & Automanage for MAAS — Crying Cloud

#!/bin/bash

# Add the service principal application ID and secret here

servicePrincipalClientId="xxxxxx-xxx-xxx-xxx-xxxxxxx"

servicePrincipalSecret="xxxxxxxxxxxxxxxxxxxxxxxx"

export subscriptionId=xxxxxx-xxxxx-xxx-xxx-xxxxxx

export resourceGroup=ArcResources

export tenantId=xxxxx-xxxx-xxx-xxxx-xxxxxx

export location=eastus

export authType=principal

export correlationId=d208f5b6-cae7-4dfe-8dcd-xxxxxx

export cloud=AzureCloud

# Download the installation package

output=$(wget https://aka.ms/azcmagent -O ~/install_linux_azcmagent.sh 2>&1)

if [ $? != 0 ]; then wget -qO- --method=PUT --body-data="{\"subscriptionId\":\"$subscriptionId\",\"resourceGroup\":\"$resourceGroup\",\"tenantId\":\"$tenantId\",\"location\":\"$location\",\"correlationId\":\"$correlationId\",\"authType\":\"$authType\",\"messageType\":\"DownloadScriptFailed\",\"message\":\"$output\"}" https://gbl.his.arc.azure.com/log &> /dev/null; fi

echo "$output"

# Install the hybrid agent

bash ~/install_linux_azcmagent.sh

# Run connect command

sudo azcmagent connect --service-principal-id "$servicePrincipalClientId" --service-principal-secret "$servicePrincipalSecret" --resource-group "$resourceGroup" --tenant-id "$tenantId" --location "$location" --subscription-id "$subscriptionId" --cloud "$cloud" --correlation-id "$correlationId"

You may also find it useful to tag the servers with a project name and possibly lock them.

I added a tag ‘ArcConnected’, and you can see all the other automatic tags added by MAAS.

And we can see the servers locked in MAAS

Importantly you can see the servers added to Azure Portal as Arc Servers

Drilling into one of the servers we can see the name assigned by MAAS, the OS we chose to deploy, the hardware model, agent version, etc.

Depending on your needs, you can do a range of things, such as connecting it to Azure ‘Automanage’ or to ‘Update management center’. Let’s go ahead and configure patches through Update Management Center (currently in preview).

As the assessments finish, we can see the updates for the on-premise servers through the Azure portal for each of the servers

Updating the settings to enable Periodic Assessment every 24 hours is optional.

Next, we can ‘Schedule updates’ and create a repeating schedule.

Ensure that we select our on-premises servers and define what type of patches to apply. In this case we only want to push Critical Updates and Security patches. If you select other Linux patches, Azure will patch things like snaps, and you may want to do those types of patches in a more controlled manner.

You can browse the ‘Maintenance Configuration’ and make any necessary changes

We can validate update history using the portal also.

We have deployed Ubuntu servers using MAAS, connected them to Azure using Azure Arc during installation with scripted onboarding, viewed missing updates, scheduled daily assessments, and created a repeating schedule to ensure critical updates and security patches are pushed to these systems.

This method could be used to manage systems in any other cloud system, bringing the management of Linux patching into the Azure control plane

This is a small window into what can be done using Azure Arc to help with operational activities in a Hybrid cloud environment

Azure Arc & Automanage for MAAS

In a previous blog, MAAS (Metal-as-a-Service) Full HA Installation — Crying Cloud, we deployed MAAS controllers to manage on-premises hardware. Let’s explore using the Azure platform to see what we can do with Azure Arc and Azure Automanage to monitor and keep our Metal-as-a-Service infrastructure systems operational.

From Azure Arc, we want to generate an onboarding script for multiple Linux servers using a service principal (Connect hybrid machines to Azure at scale - Azure Arc | Microsoft Docs). We can now run the script on each of the Ubuntu MAAS controllers.

We now have an Azure Resource that represents our on-premise Linux server.

At the time of writing, if I use the ‘Automanage’ blade and try to use the built-in or custom Automanage profile, an error is displayed: “Validation failed due to error, please try again and file a support case: TypeError: Cannot read properties of undefined (reading 'check')”

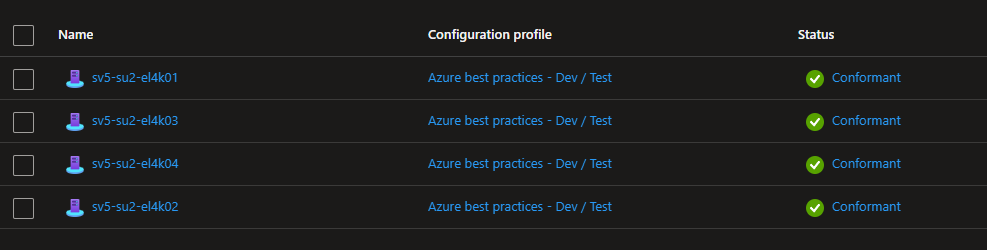

Try as I might, I could not get past this error. However, by going to each individual Arc server resource we can enable ‘Azure best practices - Dev / Test’ individually.

At the time of trying this, Automanage is still in preview and I could not create and add a custom policy. For now we can move ahead with the ‘Dev / Test’ policy, which still validates the Azure services we want to enable.

We can still see the summary activity using the ‘Automanage’ blade.

I was exploring some configuration settings with the older agents and Automanage, and it looks like some leftover configuration issues persist.

After removing the OMSForLinuxAgent and reinstalling the Arc Connected agent, all servers showed as Conformant.

As we build out more of the lab infrastructure, we will continue to explore the uses of Azure Arc and Azure Automanage.
