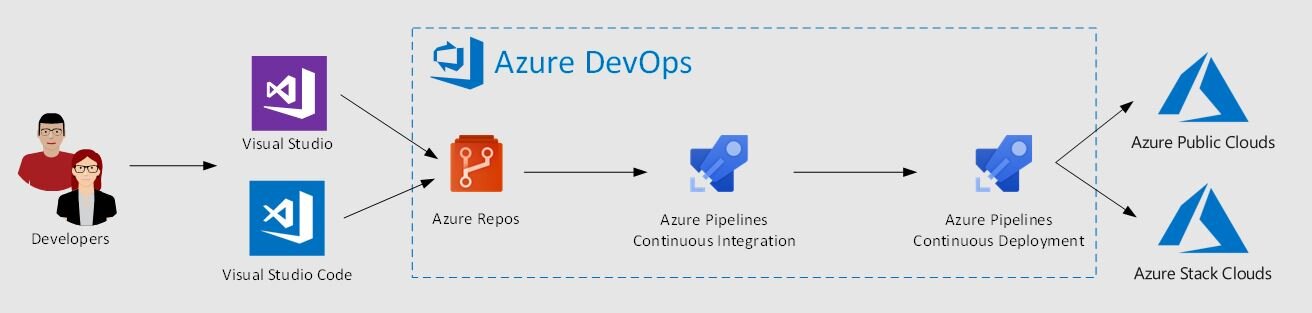

One of the key aspects of the system formerly known as Azure Stack, now called Azure Stack Hub (ASH), is that it is a target for automation. If you are considering running this at scale and you are deploying content through the portal UI, you’re probably doing it wrong.

ASH makes a great target for, among other things, a DevOps toolchain. I’m not sure whether you have tried connecting from the Azure DevOps portal recently, but Azure DevOps has undergone a lot of changes and the screens no longer match much of the documentation.

I wanted to share the steps to create a ‘Service Connection’ from Azure DevOps. This assumes you are using an Azure AD-connected ASH installation.

First, you’ll need to create a Service Principal in Azure AD.

The steps are summarized briefly below. Detailed instructions are here if you need them:

https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal

Navigate to your Azure AD and register a new application

Make sure to copy the Application ID (also called the Client ID) and the Directory ID (also called the Tenant ID) somewhere temporary, such as Notepad

Next click ‘Certificates & Secrets’

Select ‘+ New Client Secret’

Enter a description and key expiry length

Make sure to copy the secret value now, because the portal will not show it again once you leave the page
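If you would rather not rely on Notepad, here is a minimal Python sketch for holding the three values you have just copied and catching paste errors early. The class and field names are my own illustrative choices, not part of any Azure SDK, and the GUIDs in the usage example are placeholders.

```python
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServicePrincipalDetails:
    """Values copied from the Azure AD app registration (hypothetical helper)."""

    client_id: str  # Application (client) ID
    tenant_id: str  # Directory (tenant) ID
    client_secret: str = field(repr=False)  # excluded from repr so it is not printed

    def __post_init__(self) -> None:
        # Both IDs are GUIDs; uuid.UUID raises ValueError on malformed input,
        # which also catches stray whitespace picked up while copying.
        for label in ("client_id", "tenant_id"):
            value = getattr(self, label)
            try:
                uuid.UUID(value)
            except ValueError as exc:
                raise ValueError(f"{label} is not a valid GUID: {value!r}") from exc
        if not self.client_secret.strip():
            raise ValueError("client_secret is empty")


if __name__ == "__main__":
    # Placeholder values only; substitute the ones from your own registration.
    details = ServicePrincipalDetails(
        client_id="11111111-2222-3333-4444-555555555555",
        tenant_id="aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee",
        client_secret="replace-me",
    )
    print(details)  # the secret is left out of the printed representation
```

Keeping the secret out of the printed representation is a small guard against it ending up in a terminal log. For anything longer-lived than this walkthrough, a proper secret store is the safer home for it.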

Next, add the Service Principal (SPN) you have just created to your Azure Stack subscription through Access Control (IAM). At this stage you might want to capture the Azure Stack Subscription ID and name for later.

On the Azure DevOps side, I am going to assume you already have an organization and project configured.

Go to the Project Settings page and, under the Pipelines section, select ‘Service Connections’

Then click ‘New service connection’

Finally, we can create the ‘Service Connection’ using ‘Service Principal Authentication’

You need to click the hyperlink ‘Use the full version of the service connection dialog’

Select ‘Azure Stack’ from the drop-down list (obviously Azure DevOps hasn’t been told about the name change just yet)

Complete the form and select ‘Verify Connection’
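Before filling in the form, it can help to check that you have gathered everything from the earlier steps. The sketch below is only a checklist in Python. The field names are my own labels for the values collected above, plus an environment URL for your ASH Resource Manager endpoint, which I am assuming the dialog asks for. It does not call any Azure DevOps API.

```python
from typing import Dict, List

# Illustrative labels for the values gathered in this post (not an API schema).
REQUIRED_FIELDS = (
    "connection_name",
    "environment",                  # 'Azure Stack' in the drop-down
    "environment_url",              # assumed: your ASH Resource Manager endpoint
    "subscription_id",
    "subscription_name",
    "service_principal_client_id",  # Application (client) ID
    "service_principal_key",        # the client secret
    "tenant_id",                    # Directory (tenant) ID
)


def missing_fields(form: Dict[str, str]) -> List[str]:
    """Return the required fields that are absent or blank, in checklist order."""
    return [name for name in REQUIRED_FIELDS if not form.get(name, "").strip()]


if __name__ == "__main__":
    # Placeholder values only; two fields are deliberately left out.
    form = {
        "connection_name": "ash-connection",
        "environment": "Azure Stack",
        "subscription_id": "00000000-0000-0000-0000-000000000000",
        "subscription_name": "my-ash-subscription",
        "service_principal_client_id": "11111111-2222-3333-4444-555555555555",
        "tenant_id": "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee",
    }
    print("Still missing:", missing_fields(form))
```

If the list comes back empty, you have everything the form needs and ‘Verify Connection’ is the real test.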

And you’re done. Good luck with your pipeline!