Here’s a quick post on installing ASDK 2008. A recent change to the names of Azure AD roles appears to cause the ASDK install routine to fail when it checks whether the supplied Azure AD account has the correct permissions on the AAD tenant.

Following the installation docs (https://docs.microsoft.com/en-us/azure-stack/asdk/asdk-install?view=azs-2008), shortly after starting the PowerShell routine you will be prompted for the Azure AD global admin account. Once you’ve entered the credentials, the account is verified, but the check fails with an error similar to the one below:

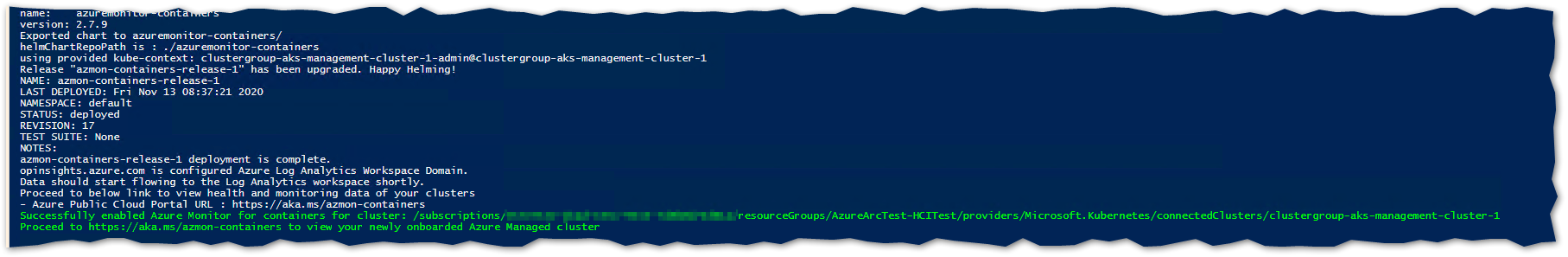

    Get-AzureAdTenantDetails : The account you entered 'admin@contoso.onmicrosoft.com' is not an administrator of any Azure Active Directory tenant.
    At C:\CloudDeployment\Setup\Common\InstallAzureStackCommon.psm1:546 char:27
    + ... ntDetails = Get-AzureAdTenantDetails -AADAdminCredential $InfraAzureD ...
    + ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
    + CategoryInfo : NotSpecified: (:) [Write-Error], WriteErrorException
    + FullyQualifiedErrorId : Microsoft.PowerShell.Commands.WriteErrorException,Get-AzureADTenantDetails

The reason for this is that a function called Get-AADTenantDetail in the c:\CloudDeployment\Setup\Common\AzureADConfiguration.psm1 module checks whether the supplied account has the correct permissions. It currently checks for the “Company Administrator” role, but that name no longer exists; it should be changed to “Global Administrator”.

To fix this, edit c:\CloudDeployment\Setup\Common\AzureADConfiguration.psm1 and navigate to line 339. Change the role name in the filter to 'Global Administrator', so that the line reads:

    $roleOid = Invoke-Graph -method Get -uri $getUri -authorization $authorization | Select-Object -ExpandProperty Value | Where displayName -EQ 'Global Administrator' | Select-Object -ExpandProperty objectId

Note: To see the module that needs the change, you have to run through the install routine once and let it fail, because the routine expands the setup directories from a NuGet package if they don’t exist.
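If you would rather not edit the file by hand, the same change can be scripted. The following is a minimal sketch, not part of the ASDK tooling: the function name `Update-AadRoleName` is hypothetical, and it assumes the old role name appears in the module only where the role check is made. It previews the matching lines, keeps a `.bak` copy, and replaces the role name.

```powershell
# Sketch only: Update-AadRoleName is a hypothetical helper, not an ASDK cmdlet.
function Update-AadRoleName {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $OldName = 'Company Administrator',
        [string] $NewName = 'Global Administrator'
    )

    # Keep a backup so the original module can be restored if needed.
    Copy-Item -Path $Path -Destination "$Path.bak" -Force

    # Read the file as a single string and do a literal (non-regex) replacement.
    $content = Get-Content -Path $Path -Raw
    $updated = $content.Replace($OldName, $NewName)

    # -NoNewline avoids appending an extra trailing newline to the file.
    Set-Content -Path $Path -Value $updated -NoNewline
}

# Path taken from the post; it only exists after the installer has run once.
$module = 'C:\CloudDeployment\Setup\Common\AzureADConfiguration.psm1'
if (Test-Path $module) {
    # Preview the matching lines to confirm only the role check is affected.
    Select-String -Path $module -Pattern 'Company Administrator' -SimpleMatch
    Update-AadRoleName -Path $module
}
```

Because the replacement is applied to every occurrence of the old name, check the `Select-String` output before relying on the result, and restore the `.bak` file if anything unexpected was changed.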

If you re-run the install routine, it should get past this stage (assuming the account you provided is a global admin for the tenant :) )